Not an AI wrapper: What a real lending AI tech stack looks like

TL;DR: In lending, “AI wrapper” usually means thin model usage with weak governance and inconsistent accuracy on messy files. A real lending AI stack is built on domain training data, specialized models, orchestration, verification and audit-ready controls.

The term “AI wrapper” has become shorthand for a growing buyer concern: a vendor that appears to be a modern AI company in a demo but relies on generic models and manual cleanup once real borrower files are integrated into the system. In lending, that gap is expensive. It shows up as longer cycle times, higher defect rates, inconsistent decisions and elevated fraud and repurchase risk.

The challenge is that the label is often applied imprecisely. Many teams see “human-in-the-loop” and assume the vendor is papering over weak automation. In credit workflows, the opposite is often true. Human review can be the governance layer that makes automation safe, auditable and continuously improving.

So, how do you distinguish a wrapper from a genuine AI platform designed for lending? Not by the model name on the slide, but by the infrastructure behind the output.

Why wrappers break in lending

Wrappers tend to share the same failure mode: they perform well on clean examples and degrade on the messy files that define real-world underwriting. That includes mixed income types, self-employed borrowers, non-standard statement layouts, multi-entity businesses, handwritten or photographed notes and documents that do not match the application.

Those edge cases are not rounding errors. They drive the bulk of manual work, escalations and rework. They are also where misrepresentation and fraud are most likely to hide. If a system cannot handle the messy slice of the portfolio, it is not fit for decisioning at scale.

Five signals you are looking at a real lending AI stack

Use these signals as a framework to evaluate any vendor claiming “AI-powered” decision intelligence.

- Domain training data that compounds

A real lending stack is built on labeled, domain-specific data that is collected and refined over time. The differentiator is not access to a foundation model. It is repeated exposure to financial documents across thousands of document types, edge cases and exceptions, combined with a closed loop that learns from mistakes and feeds retraining.

- Specialized models tuned for financial artifacts

General-purpose models can be impressive, but lending requires precision. A mature stack uses models tuned for bank statements, pay stubs, tax forms and related artifacts, with attention to layout variability, numeric conventions and multi-page context. The goal is consistent interpretation, not fluent text.

- Model orchestration instead of model monogamy

No single model wins every task. Strong systems route work dynamically based on document type, field type and confidence profiles. Orchestration matters because a pay stub is not a bank statement and a cash flow field is not a name field. If a vendor cannot describe how it chooses the best model for each task, you are likely looking at a thin integration layer or AI wrapper.

- Verification that checks work before it ships

Decision intelligence is only useful if it is trustworthy. Advanced stacks use verification patterns where outputs are cross-checked before results are delivered downstream. Some teams use multi-agent “maker-checker” designs, others rely on deterministic controls and reconciliation logic. The key is a repeatable mechanism that reduces silent errors.

- Human-in-the-loop as governance and training, not as a backstop

In lending, human review is often the difference between “automated” and “audit-ready.” The strongest implementations define when humans intervene, what they validate and how their work creates labeled data that improves future accuracy. If human-in-the-loop is treated as an operational patch rather than a learning system, performance will plateau. Real AI must always be re-learning.
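
To make the orchestration, verification and human-review signals above more concrete, here is a minimal sketch of how they might fit together. It is not any vendor's implementation. The model names, the routing table, the 0.90 review threshold and the `Statement` structure are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical routing table: (document type, field type) -> model name.
# Real systems would likely also weigh confidence profiles and cost.
ROUTES = {
    ("bank_statement", "amount"): "statement-numeric-model",
    ("pay_stub", "amount"): "paystub-numeric-model",
}
DEFAULT_MODEL = "general-purpose-model"
REVIEW_THRESHOLD = 0.90  # assumed value; below this a human validates the output


def choose_model(doc_type: str, field_type: str) -> str:
    """Orchestration: pick a model per (document type, field type)."""
    return ROUTES.get((doc_type, field_type), DEFAULT_MODEL)


@dataclass
class Statement:
    opening_balance: Decimal
    closing_balance: Decimal
    transactions: list[Decimal]  # signed amounts: credits > 0, debits < 0
    confidence: float            # lowest field confidence reported by the model


def reconciles(stmt: Statement) -> bool:
    """Deterministic control: opening balance plus transactions must equal closing balance."""
    total = stmt.opening_balance + sum(stmt.transactions, Decimal("0"))
    return total == stmt.closing_balance


def disposition(stmt: Statement) -> str:
    """Verification gate: decide whether an extraction ships or goes to human review."""
    if not reconciles(stmt):
        return "human_review:reconciliation_failed"
    if stmt.confidence < REVIEW_THRESHOLD:
        return "human_review:low_confidence"
    return "deliver"


if __name__ == "__main__":
    stmt = Statement(
        opening_balance=Decimal("100.00"),
        closing_balance=Decimal("150.25"),
        transactions=[Decimal("75.25"), Decimal("-25.00")],
        confidence=0.97,
    )
    print(choose_model("bank_statement", "amount"), disposition(stmt))
```

In a design like this, the reasons attached to each `human_review` outcome matter as much as the gate itself: they define when humans intervene and what they validate, and the corrections reviewers make can be stored as labeled examples for retraining.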

A buyer checklist to pressure-test the wrapper concern

Bring these questions into discovery. A wrapper will answer vaguely. A real platform will answer specifically.

- What is your strategy for domain training data and labeling over time?

- Which parts of the workflow use specialized models vs general-purpose models?

- How do you orchestrate models across document types and tasks?

- What verification controls exist before results reach underwriters?

- How is human review structured to improve accuracy, governance and auditability?

Also ask one question that reveals maturity fast: how the vendor manages inference efficiency and cost discipline as model options and hardware evolve. Teams that operate at scale treat efficiency as a product feature, not a line item.

A brief example of what a “real stack” looks like in practice

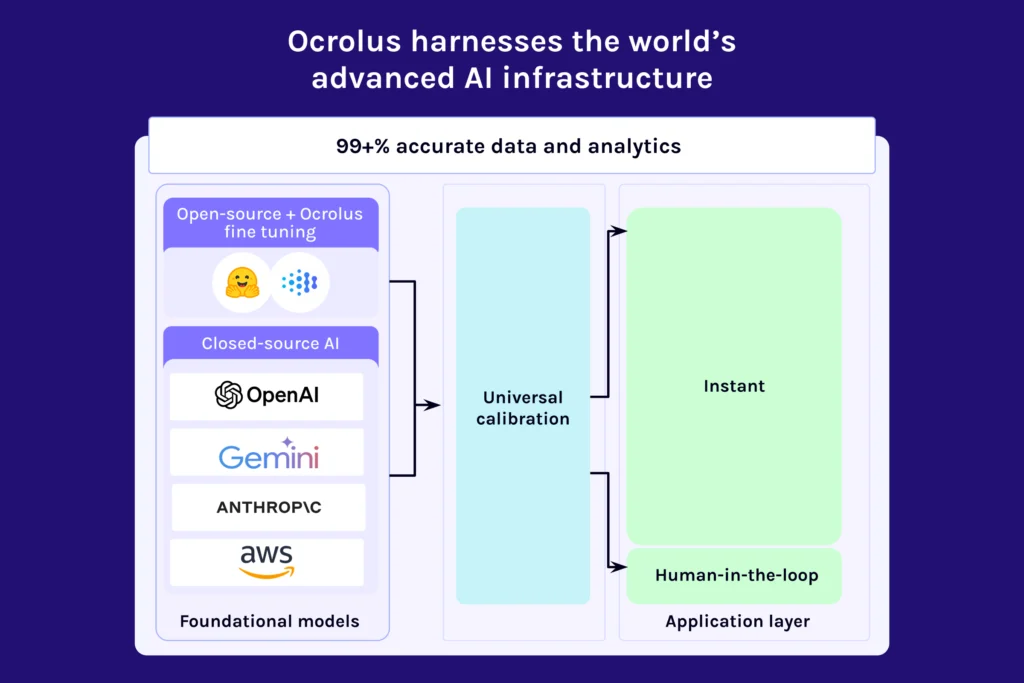

One way to make these concepts concrete is to look at how a vertical platform describes its approach. Ocrolus is an AI-powered workflow and data analytics platform built for lending-grade document and data analysis. Its positioning emphasizes specialized models, model orchestration, verification and governed human-in-the-loop quality assurance to produce audit-ready analytics at scale. If you’re interested in a deep dive on fine-tuned AI models for financial processing, check out our latest blog.

Key takeaways

- “AI wrapper” risk shows up in messy files, not curated demos

- Real lending AI stacks are built on domain training data and specialized models

- Orchestration and verification are the architecture signals buyers should demand

- Human-in-the-loop can be governance and a learning engine, not a workaround

- Ask about inference efficiency to separate mature platforms from thin layers